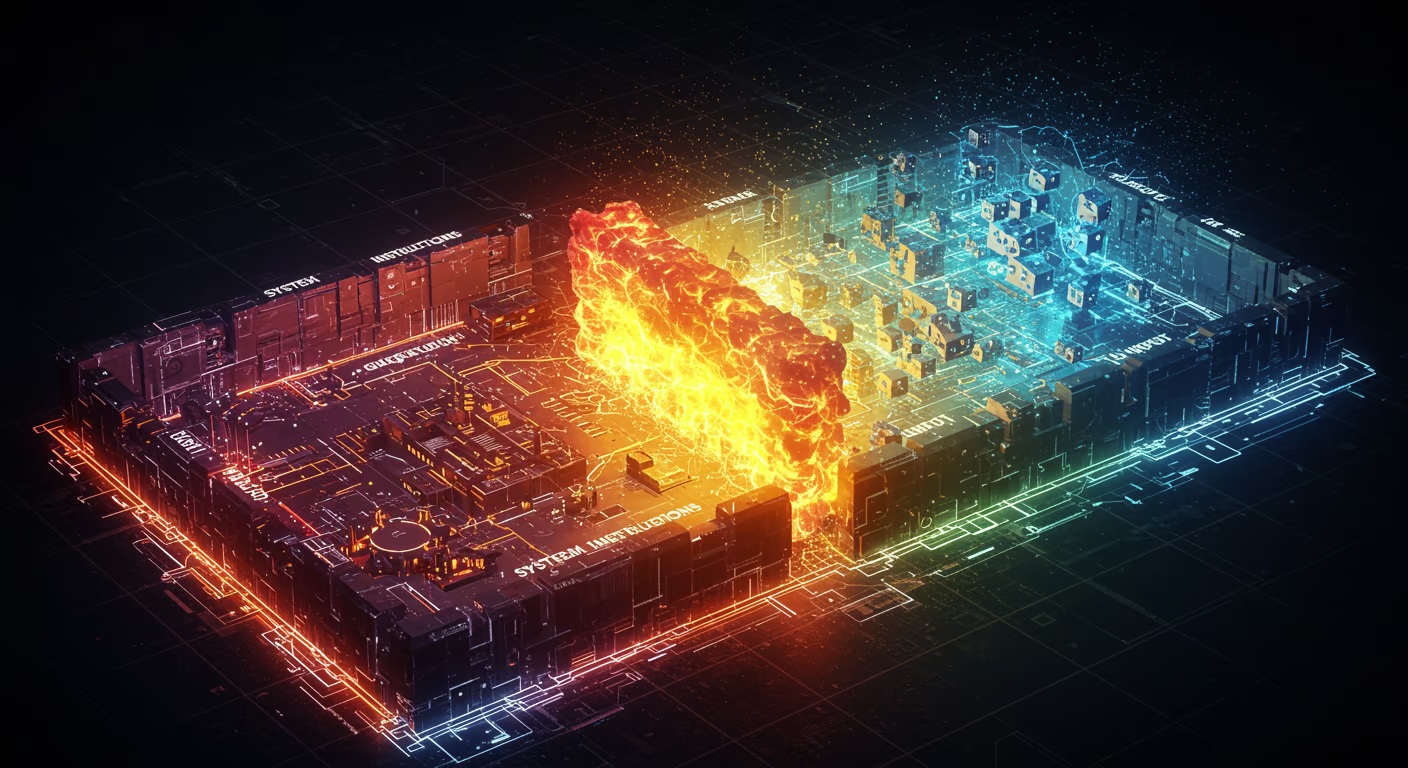

Prompt Injection Mitigation: DeepMind’s LLM Partitioning Strategy

Ever wondered how to shield AI from sneaky prompt hacks? Discover how DeepMind’s LLM partitioning strategy mitigates prompt injection and strengthens your defenses.