Prompt Injection Mitigation: DeepMind’s LLM Partitioning Strategy

Why Prompt Injection Mitigation Matters in Today’s AI World

Have you ever wondered how a simple chat with an AI could turn risky? Prompt injection mitigation is crucial as large language models (LLMs) become everyday tools, powering everything from customer service bots to creative writing aids. These models, while revolutionary, can be tricked by sneaky inputs that override their intended behavior, potentially exposing sensitive data or causing unintended actions. Let’s dive into this growing challenge and see how DeepMind is leading the charge to make AI more secure.

Demystifying Prompt Injection Attacks and Their Dangers

Prompt injection attacks are a sneaky way attackers manipulate LLMs, embedding hidden commands in user inputs to hijack the system. Imagine a helpful AI assistant suddenly spilling confidential information because of a cleverly worded query—that’s the kind of risk we’re dealing with. Effective prompt injection mitigation isn’t just about fixing bugs; it’s about building a shield around AI to maintain trust and protect privacy in an increasingly digital world.

Everyday Techniques in Prompt Injection Attacks

Attackers use various tricks to exploit LLMs, like hiding instructions in plain sight or using special characters to bypass safeguards. For instance, they might embed commands in a long email draft that tricks the model into ignoring its core rules. Common methods include slipping in hidden directives, exploiting delimiters to sidestep system prompts, or overwhelming the model with repetitive text to break its constraints. What if your business relied on AI for data analysis—could these tactics expose your secrets?

Evaluating Current Strategies for Prompt Injection Mitigation

Many organizations are already tackling prompt injection mitigation with proven tactics, but they’re not perfect. Standard approaches include crafting tight system prompts to guide model behavior, sanitizing inputs and outputs to filter out threats, and using delimiters to separate safe instructions from user queries. While these help, savvy attackers keep finding ways around them, which is why we need more robust solutions.

For example, adversarial training exposes models to fake attacks during development, building resilience over time. Then there’s role-based access, which limits what the AI can touch, like restricting it to only approved data sources. Still, as one security expert noted, these methods often react to threats rather than preventing them outright—leaving gaps that prompt injection mitigation strategies must address.

DeepMind’s Game-Changing Approach to Prompt Injection Mitigation

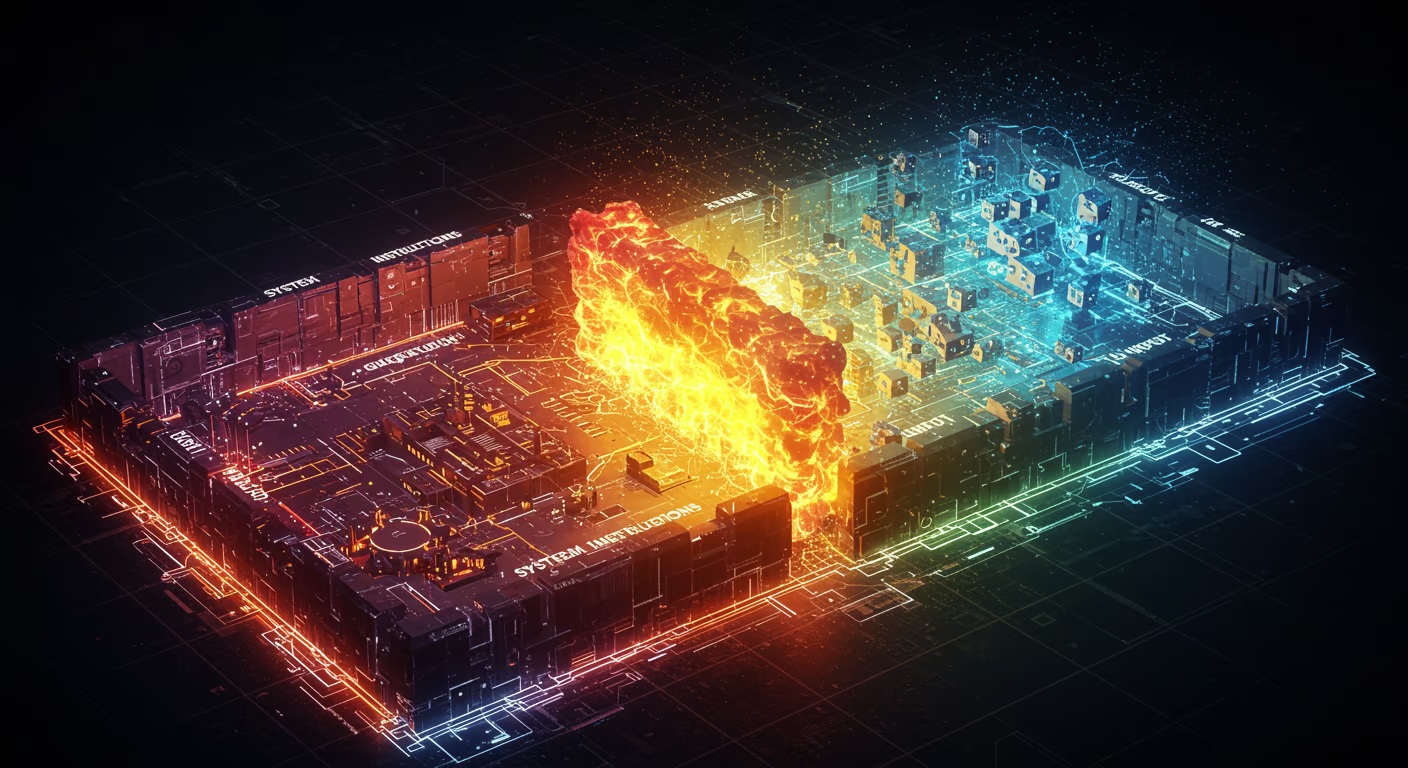

DeepMind has stepped up with an innovative LLM partitioning strategy that rethinks how we handle prompt injection mitigation from the ground up. This method divides the model’s operations into separate “compartments,” ensuring that user inputs can’t easily meddle with core system rules. It’s like giving your AI a fortified wall, where malicious prompts bounce off harmlessly.

How DeepMind’s LLM Partitioning Boosts Prompt Injection Mitigation

- System and User Context Separation: By isolating trusted system instructions from everyday user prompts, this strategy prevents mix-ups that could lead to breaches—think of it as keeping your personal files in a locked drawer away from public access.

- Scope Limitations: Each partition sticks to its role, so even if an attacker slips in a rogue command, it can’t wander into sensitive areas. This is a key win for prompt injection mitigation, as it stops instructions from overriding the model’s built-in safeguards.

- Access Control Rules: Fine-tuned permissions mean partitions only access what’s necessary, reducing the chance of data leaks or misuse. As you can see in the image below, this creates a clear barrier against threats.

Practical Tips for Implementing DeepMind’s LLM Partitioning

Putting prompt injection mitigation into practice with LLM partitioning isn’t just theoretical—it’s actionable. Start by defining clear boundaries in your AI workflows, such as using structured data formats to separate system and user inputs. This way, you can validate queries before they reach the model, catching potential issues early.

Don’t forget to monitor partitions for unusual activity, like sudden spikes in query patterns, and run regular tests with simulated attacks. For teams building AI apps, this means iterating on designs with tools like adversarial testing—it’s a straightforward step that could save you from future headaches. Have you tried stress-testing your AI yet?

Layering Defenses for Stronger Prompt Injection Mitigation

To maximize protection, combine LLM partitioning with other tools, such as output validation and context-aware filters that spot known attack signs. A real-world example: In a customer support chatbot, pairing these with input sanitization could prevent spam from turning into a security breach. This multi-layered approach ensures your AI stays reliable, even against evolving threats.

Internal link: For more on AI security basics, check out our guide on AI Security Fundamentals. And if you’re curious about broader innovations, explore DeepMind’s AI Advances on our site.

What’s Next in the Evolution of Prompt Injection Mitigation

Looking ahead, prompt injection mitigation could get even smarter with zero-shot safety models that detect risks on the fly. Picture an AI that instinctively rejects suspicious prompts without needing prior examples—it’s like giving your system a sixth sense. Other ideas include AI-driven filters that adapt to new threats and community libraries sharing defense tactics for collective growth.

External link: As highlighted in a DeepMind research paper, these advancements are paving the way for safer AI (see DeepMind’s research hub for details). What innovations do you think will shape the future?

Wrapping Up: Building Trust Through Effective Prompt Injection Mitigation

In the end, prompt injection mitigation isn’t just about tech—it’s about creating AI we can rely on. DeepMind’s LLM partitioning strategy offers a solid foundation, blending architectural smarts with practical safeguards to fend off attacks. By adopting these measures and staying vigilant, you can make your AI setups more secure and user-friendly.

So, what are your thoughts on protecting LLMs from these risks? Share your experiences in the comments, explore more AI topics on our site, or pass this along to a colleague facing similar challenges. Let’s keep the conversation going!

References

- [1] A study on prompt injection risks from OWASP, available at OWASP LLM Top 10.

- [2] Insights on adversarial training from a DeepMind publication, via DeepMind Research.

- [3] Input sanitization techniques discussed in a security guide from NIST, at NIST SP 800-53.

- [4] Strategies for system prompts from AI safety resources, referenced in various industry reports.

- [5] Delimiter and access control best practices from OpenAI documentation, at OpenAI Safety Best Practices.

- [7] Community-driven defenses from Hugging Face guides, available at Hugging Face Safety.